The GLPMA website has recently been updated to include two new documents: a summary of inspection findings from 2014 and a guidance document on the use of test sites that have not been inspected by their national GLP monitoring authority. The guidance document was produced in response to a number of similarly themed questions received via the GLP mailbox and at the recent GLP symposium. Both documents can be found on the MHRA website.

Before I go any further I should introduce myself, as I may be unfamiliar to many of you. My name is Martin Reed and I joined the MHRA as a GLP inspector in 2015. I estimate that I have visited approximately 20% of the facilities within the programme so far. I have recently compiled the annual GLP inspection metrics for the period January to December 2014. I hope that you find the report useful and that it helps you understand how your facility's findings compare with those identified across the wider UK GLP community. In the next few paragraphs I will describe what we do with this information and discuss some of the critical and major deficiencies raised during this period.

In 2014, the UK GLPMA conducted a total of 53 inspections: three implementation inspections, one facility closure inspection and 49 routine compliance monitoring inspections. This was an increase from the 47 inspections conducted in 2013. Despite the increase in inspections, the number of critical and major deficiencies decreased, while the overall total of inspection findings remained broadly similar (713 in 2013 and 711 in 2014).

So how do we produce these numbers? After each inspection, inspectors categorise the findings that have been raised and assess their root cause against a series of defined categories and subcategories. For example, one category is "Study Conduct", whose subcategories include the study director or principal investigator (SD/PI) not discharging their duties, multi-site aspects, problems with supporting records and inability to reconstruct study activities. Each category also includes a "specific observations" subcategory, used to capture deficiencies whose root cause cannot be assigned to any of the other subcategories.

So what do the inspectors do with the numbers once collated?

We're able to look across the programme and identify trends, which can help to drive inspection focus. Those of you who have been involved in multiple inspections over the years will have seen this change in inspection focus yourselves. When we identify an area of overall weakness, this may prompt the production of guidance documents or topics for symposia, to help strengthen industry's knowledge in the areas our data suggest are causing compliance issues. For example, those who attended the symposium in September 2015 will know that one of our hot topics is "data integrity" in relation to computerised systems, and that we will soon be publishing our GxP guidance document on this topic. This topic was highlighted as a result of the inspection metrics, and it will be interesting to see how the number of deficiencies raised in this area changes over the next few years.

Looking at the 2014 data, both critical deficiencies raised related to the quality assurance (QA) function and concerned insufficient QA monitoring of individual studies. Adequate QA is a crucial part of performing GLP studies: without adequate QA monitoring, there is no adequate assurance that a study has been conducted in accordance with the GLP Principles. Fortunately, on both occasions these deficiencies were judged to be isolated incidents rather than indicative of issues with the wider QA programme.

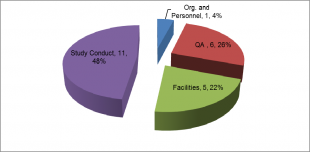

The breakdown of the 2014 majors is shown below:

As in 2012 and 2013, the highest number of major deficiencies was assigned to study conduct. This is to be expected, as all deficiencies raised from the review of a study are categorised here. Study conduct major deficiencies arose from a range of observations, including:

- Inappropriate level of study director oversight.

- Inadequate recording of study data and supporting records to reconstruct study activities.

- Use of methods that had not been appropriately validated.

- Study reports not being completed in a timely manner.

Although relatively few in number, the quality assurance major findings were spread across the subcategories. Rather than list them here, I will leave you to explore the breakdown in the report.

So how can you use this data?

One of my favourite parts of the job is seeing the diversity of facilities within the programme, so how each facility can best use the data will vary. You could consider reading through the report and discussing at your next quality group meeting how you think you measure up in the areas that generate the most findings. It may also be interesting to look at the trends in your own QA audit findings and compare them with the trends we see across the UK as a whole. I am aware that most people reading this blog will work in quality assurance, but I think it is important that the published information is communicated to all staff involved in maintaining GLP compliance. Could it feed into training sessions or prompt a review of processes? Could it prompt an SD/PI to consider how they document deviations? Could it prompt an analyst to consider how they document analyses?

One question I have been asked recently on inspection is how we select the studies to review. When the master schedule is sent ahead of the inspection, the inspector reviews it and looks for areas of risk. The information assessed includes: How many studies are assigned to a particular study director? Are there new study types on the schedule? Are there delays in reporting following completion of the experimental phase? Are there delays in archiving? A large number of studies assigned to one study director does not mean that there is insufficient oversight, but it could lead an inspector to ask how oversight is maintained and demonstrated.

These are just a few suggestions of how the data might prove useful. My advice is to think about how you would use the information if you were an inspector, as the chances are we will be thinking along similar lines.

As well as the inspection metrics, a new guidance document on the use of test sites that have not been inspected by their national GLP monitoring authority has recently been uploaded to the GLPMA website. It was produced in response to a number of similarly themed questions received via the GLP mailbox and at the recent GLP symposium.

We will continue to develop how we feed back information to you, and we aim to communicate the 2015 metrics ahead of the next laboratories symposium, which is provisionally scheduled for September. We are currently working on the agenda for this event, so if you have any comments on the report or suggestions for topics you would like us to present at the symposium, please contact the GLP mailbox.

If you need advice to resolve a compliance issue at your site, remember that you can contact the GLPMA via the mailbox. We can provide advice on your proposed actions and help you make the right decision to avoid a problem that may lead to deficiencies at your next inspection. The mailbox is monitored daily and we try to respond to questions as soon as possible. For guidance on how to submit a query, please see the 'Helping us to help you' post written by my colleague Chris Gray.